Light Floor

Interactive Floor

Client: WNDR Museum

Role: Technical Director, Exhibit Design and Development

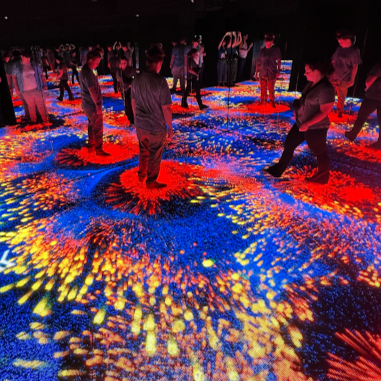

The Light Floor is WNDR Museum’s staple attraction at all of its locations. It encapsulates the WNDR experience: multisensory works of art that awaken and evolve with human interaction. Visitors dance, explore, and reflect upon the infinity created by mirrors on the walls and floor. The floor is composed of many LED panels, much like the LED screens you might see at a concert, but designed to withstand direct impact from people standing, jumping, and lying on them. The panels also have a unique feature that lets the Light Floor sense the location of people’s shoes, bodies, and touch: hundreds of thousands of tiny optical sensors determine where everyone is on the floor. The visualizer displayed on the floor reads all of this sensor data every 33 milliseconds (about 1/30th of a second) and updates the visuals based on where people are standing.

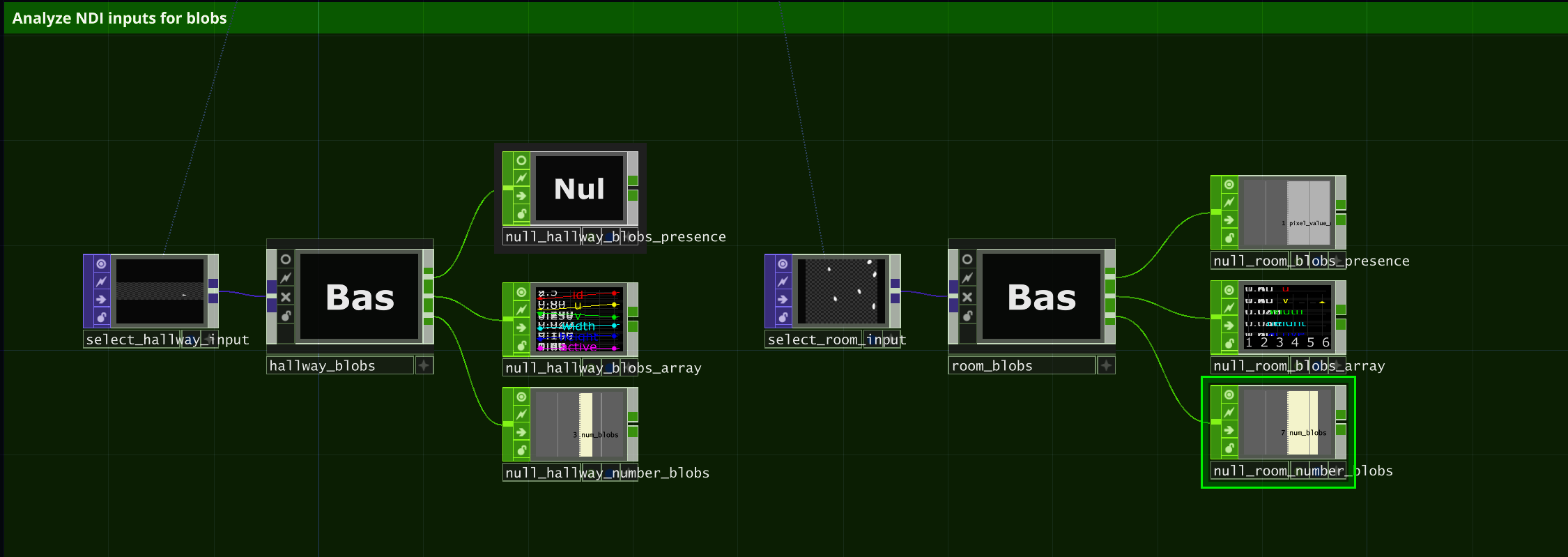

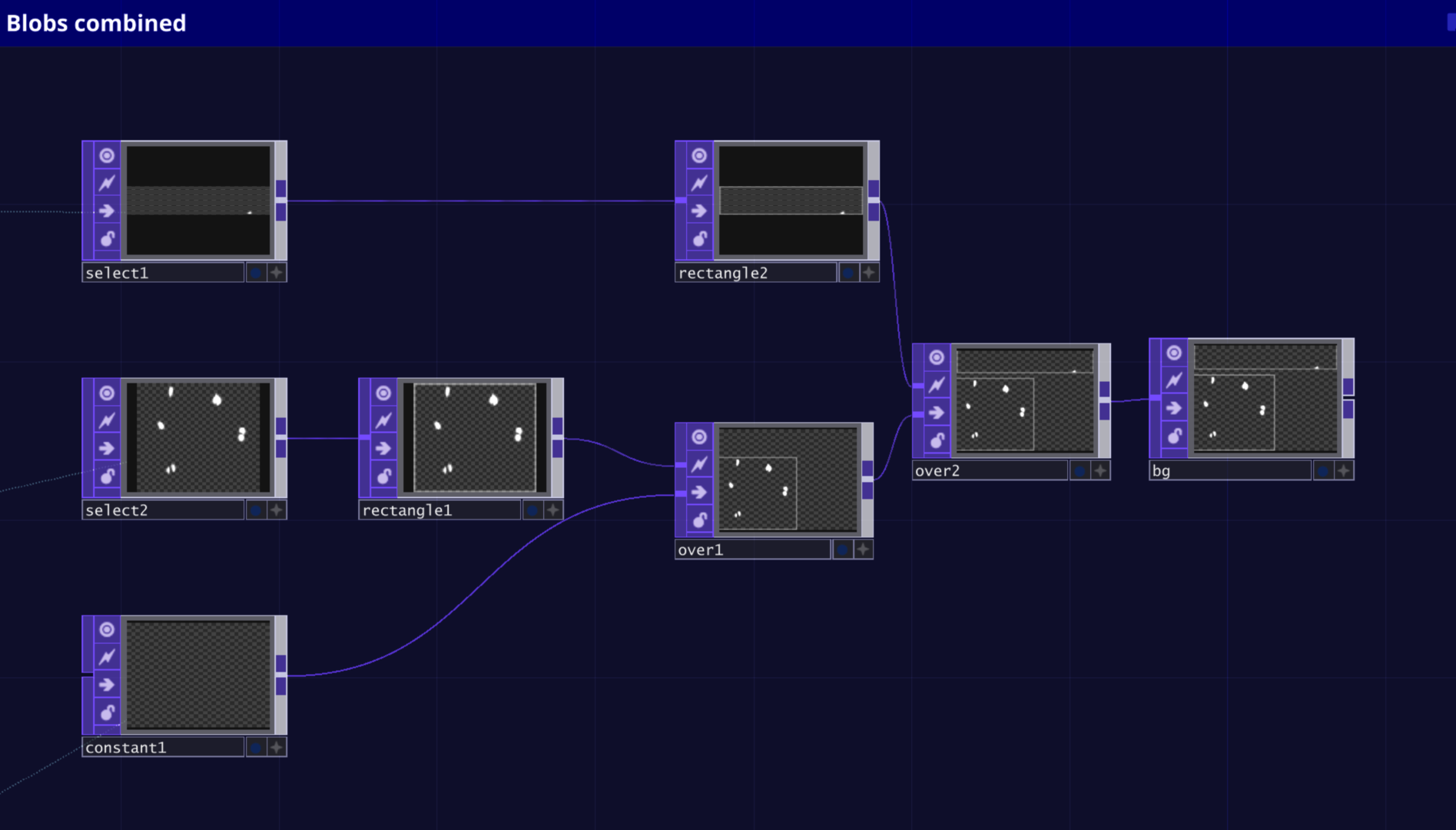

For the floor at each location, a custom TouchDesigner app was developed to create a unique visual experience. The data sent from the sensors is interpreted using blob tracking, and the resulting positional data is fed into shaders to create real-time visual effects such as colorful particle and fluid systems. Multiple looks were built for the floors across all museums, and each simulation presents a different experience.
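To illustrate how positional data can drive a particle look, here is a minimal CPU sketch in plain Python. It is not the project's shader code: the production effects run on the GPU inside TouchDesigner, and the function name `step_particles`, the normalized 0..1 floor coordinates, and the `radius`, `strength`, and `damping` values are all assumptions chosen for illustration.

```python
def step_particles(particles, blobs, radius=0.15, strength=0.02, damping=0.9):
    """Advance particles one frame; each blob pushes nearby particles away.

    particles: list of dicts with x, y, vx, vy in normalized 0..1 floor space
               (an assumed layout, not the project's data format).
    blobs:     list of (x, y) tracked positions in the same space.
    """
    for p in particles:
        for bx, by in blobs:
            dx, dy = p["x"] - bx, p["y"] - by
            dist = (dx * dx + dy * dy) ** 0.5
            if 0 < dist < radius:
                # The push falls off linearly to zero at the edge of the radius.
                push = strength * (1 - dist / radius)
                p["vx"] += dx / dist * push
                p["vy"] += dy / dist * push
        # Damping bleeds off velocity so particles settle after a visitor leaves.
        p["vx"] *= damping
        p["vy"] *= damping
        # Clamp positions so particles stay on the floor.
        p["x"] = min(1.0, max(0.0, p["x"] + p["vx"]))
        p["y"] = min(1.0, max(0.0, p["y"] + p["vy"]))
    return particles


particles = [
    {"x": 0.55, "y": 0.5, "vx": 0.0, "vy": 0.0},  # close to the visitor
    {"x": 0.9, "y": 0.9, "vx": 0.0, "vy": 0.0},   # far away, unaffected
]
step_particles(particles, blobs=[(0.5, 0.5)])
```

Calling the function once per frame with the latest blob positions moves the nearby particle away from the visitor and leaves the distant one where it is. A compute shader would apply the same per-particle update in parallel.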

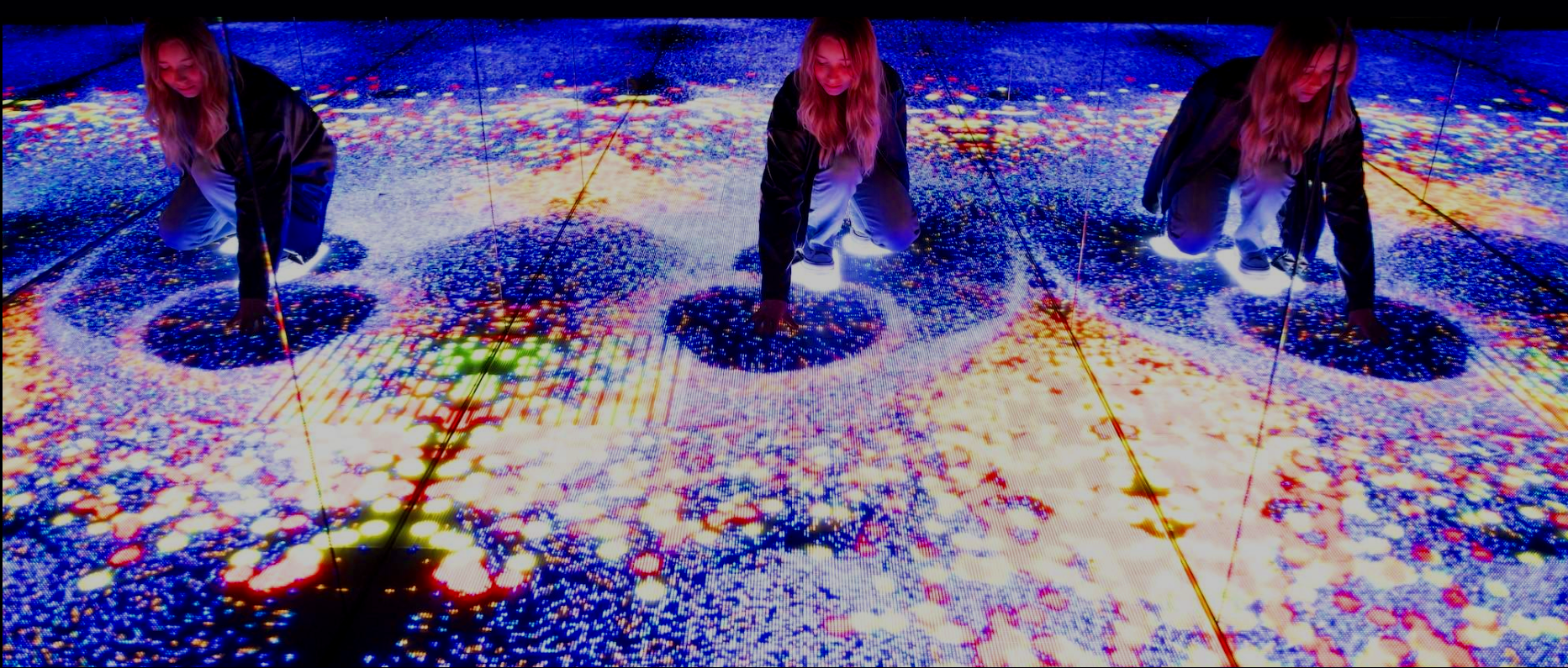

Several visual iterations were explored during the development process to experiment with different styles of interaction and texture. The following images showcase a range of looks created using real-time GPU-driven simulations, including a compute-shader-based particle system, an ice crack simulation, and a fluid dynamics simulation. Each visual approach was designed to test how movement could generate unique light-based feedback, helping to refine the final aesthetic and behavioral qualities of the interactive floor.

Sensor data from Brightlogic’s custom interactive panels was streamed via NDI into TouchDesigner, where a blob-detection algorithm extracted touchpoint positions. These blobs not only drove interaction logic but also generated a stylized visualization that elevated the low-resolution input into a more legible and expressive output.
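The blob-detection step can be sketched in plain Python as a threshold followed by connected-component grouping. The source does not say which algorithm or TouchDesigner operator was used, so this is only one common approach: the function name `find_blobs`, the `threshold` and `min_area` parameters, and the tiny sample frame are hypothetical.

```python
from collections import deque


def find_blobs(frame, threshold=0.5, min_area=2):
    """Return one (x, y, area) tuple per blob in a 2D grid of sensor readings.

    frame: list of rows, each a list of sensor values (assumed 0..1).
    x and y are the centroid of the blob in sensor-grid coordinates.
    """
    rows, cols = len(frame), len(frame[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or frame[r][c] < threshold:
                continue
            # Flood-fill one connected region of active sensors (4-connectivity).
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((x, y))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and frame[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Drop regions too small to be a foot or hand (treated as noise).
            if len(pixels) >= min_area:
                cx = sum(px for px, _ in pixels) / len(pixels)
                cy = sum(py for _, py in pixels) / len(pixels)
                blobs.append((cx, cy, len(pixels)))
    return blobs


# Hypothetical low-resolution sensor frame with two touch regions.
frame = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0, 1],
]
blobs = find_blobs(frame)
```

For this sample frame the function reports two blobs: a 2x2 region centered at (1.5, 1.5) and a two-cell region centered at (5.0, 2.5). Dividing each centroid by the grid size would give normalized positions suitable for passing to a shader.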

Visual Development

Multiple visual prototypes were developed to calibrate color values across PC displays and LED panels, with render resolutions tailored to room dimensions. Designed to ignite wonder and awe, these visuals form the first visitor experience and were crafted to deliver immediate impact. Below are selected design iterations.